NOKIA: NOt a King In America !!

January 26, 2010

Please keep visiting for insights, analysis, and discussions on wireless technologies, business and trends at shahneil.com

————————————————————————————————————————

NOKIA, as we all know (though I am not sure about the common man in North America !!), is the world’s undisputed leader in mobile devices, with particular dominance in the European, Asian and Latin American markets.

But NOt a King In America !! Why??

To shed more light on this conundrum, let’s analyze the growth of the mobile devices industry and the contribution of the industry leader over the four years 2006-2009. This has been a significant phase of big changes in the mobile devices industry: the advent and growth of smartphones, with new players entering with smart devices and hurting the incumbents’ sales (yes, smartly enough).

(Note: I have approximated Q4’2009 data from the previous industry and Nokia’s sales data, as Nokia has still not released its Q4’09 results)

The above graph depicts Nokia’s consistent worldwide market share, but there is a dip in 2009, coupled with the industry contraction in late 2008 and early 2009 as the recession slowed the growth prospects of the mobile devices industry.

There are more causes behind this dip in the world leader’s performance. Let’s see where it has lost market share. There is no prize for guessing, but let us confirm it…

It’s crystal clear from the above, which depicts NOKIA’s dominance everywhere except North America. Nokia’s market share in North America has fallen dramatically, from a whopping 35% (the leader) in 2002 to a below-par 8% in 2009.
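To put the size of that slide in numbers, here is a rough back-of-the-envelope sketch. It uses only the two figures quoted above (35% in 2002, 8% in 2009) and assumes a smooth year-by-year decline, which the real data almost certainly did not follow. The function name is purely illustrative.

```python
def compound_annual_change(start_share, end_share, years):
    """Constant yearly rate that takes start_share to end_share."""
    return (end_share / start_share) ** (1.0 / years) - 1.0

start, end = 35.0, 8.0   # North American market share in percent, from the post
years = 2009 - 2002      # seven yearly steps

points_per_year = (start - end) / years
rate = compound_annual_change(start, end, years)

print(f"Average loss: {points_per_year:.1f} share points per year")
print(f"Equivalent compound change: {rate:.1%} per year")
```

Under that smoothing assumption, Nokia gave up a little under four share points a year, or roughly a fifth of its remaining North American share annually.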

What have been the main reasons that Nokia is not a King in a developed market like North America?

Why isn’t it able to leverage the brand equity it has earned all over the world here in the American markets? Is it the product portfolio, the operator partnerships, the go-to-market sales strategy, the attitude, or a failure to understand North American consumers?

Issue 1: Birth of Smartphones and Apple iPhone …

The smartphone growth driven by the BlackBerrys and Palms of the world in the mid-2000s was suddenly accelerated by Apple’s first step into the mobile phone industry with the iPhone, leveraging its iPod success. This certainly made things difficult for Nokia: Apple, with its good core hardware design and a mind-blowing touch screen interface coupled with applications and the App Store, revolutionized the smartphone market. The smartphone was now smarter and available to the everyday consumer (unlike BlackBerrys, which had more enterprise users), creating a breathtaking user experience. Nokia had no answer to the iPhone’s success; the iPhone steadily competed with its high-end product offerings and captured the growing smartphone category along with the incumbent BlackBerry.

If we look at a snapshot of year-on-year growth in global smartphone market share, the situation is evident: Nokia’s worldwide share of the smartphone category has fallen from 51% to 40%. We all know this is the must-have category in any product portfolio, with 15% year-on-year industry growth. Dominance in this category will also dictate who leads the global mobile industry in the coming years, as the advanced wireless networks (HSPA+, WiMAX, LTE) are designed for such data-hungry devices.

Issue 2: Product Mix:

Continuing the discussion on smartphones: though Nokia holds 46% of the global share with its Symbian OS and 40% of global smartphone handset sales, Symbian has a far clunkier user interface and lacks the power of applications that the iPhone ecosystem has in store for its users. Nokia should come up with new smartphone devices for enterprise as well as everyday consumers. The likes of the Nokia E71x, N97 and N900 should enhance Nokia’s product portfolio in North America.

Two of the top three operators in the USA (Verizon Wireless and Sprint Nextel) run on CDMA technology. Nokia never focused on CDMA handsets in its portfolio, especially in North America: CDMA-based handsets make up only 14% of Nokia’s total mobile handset sales. This is why it needs to focus on including CDMA handsets in its portfolio.

Issue 3: Mobile Operator Partnerships & Go-to-Market Sales Strategy

I believe this is the key to Nokia gaining market share in North America. “Subsidy” to end users has been the primary factor driving handset sales here, and mobile subscribers are accustomed to it… But almost 70% of Nokia’s worldwide sales are based on a “direct buy” go-to-market strategy. A subscriber who needs a handset pays around $100-$300 for a multimedia phone, or even $400 to $500 for a high-end smartphone, and in many of the bigger Asian markets like India and China, Nokia’s sales are direct, without any subsidy to subscribers.

In North American markets this sales strategy won’t drive sales; only stronger partnerships with the tier-one mobile operators will boost Nokia’s handset sales. Nokia has to be flexible on its price points in North America and develop relationships with these operators. The recent success of the Nokia E71 series with AT&T is the biggest example, helping Nokia keep its 8% market share almost flat compared to 2008. Nokia should come up with a CDMA/EV-DO based smartphone, especially to tackle the Korean handset companies (Samsung and LG), which have also captured Nokia’s market share over these years and topped the market share charts in North America.

I can relate to this situation perfectly. I have always been a hardcore NOKIA fan. I always bought expensive Nokia N Series phones costing between INR 15,000 and INR 25,000 ($300 to $500) back in India, but after coming to the USA I was bitten by the subsidy bug: it lured me into choosing an iPhone 3G ($100) offered via AT&T instead of buying an unlocked Nokia 5800 for $400 !!

Issue 4: Understanding American Consumers

Along with the subsidy game and reaching consumers on price points, Nokia earlier failed to understand the tastes of American consumers, instead mass-producing devices for the global market to save on production costs. It lacked flip phones, smartphones with QWERTY keyboards to catch the “texting” trend, and touch screens coupled with great social networking, navigation and utility applications that create ease of use and a great user experience.

Road to the Throne !!

Summing all this up, Nokia does have lots of issues on its plate, but at the same time it possesses the technical and business capabilities, brand equity and capital to make amends. There have already been a lot of strategic improvements from Nokia’s end.

Nokia is revamping its North American operations to collaborate more closely with the major American operators. AT&T this year will begin billing customers who use Nokia services branded as Ovi. Those customers will no longer receive a second bill from Nokia. And in Canada, the network leader, Rogers Communications, is making it easier to access Ovi Maps and N-Gage game services on two Nokia models.

There are huge plans to revitalize Nokia’s Ovi app store, and the acquisition of Navteq should give it significant leverage. Nokia’s recent move to offer free lifetime turn-by-turn navigation built into its smartphones will revamp the location-based services roadmap. It is available in 74 countries and 46 languages, with traffic information for about 10 countries and detailed maps for more than 180 countries to start with.

Another big leap Nokia has taken is in its R&D expenses, which have risen from 5% in 2007 to 12.5% in 2009; this should lay a strong roadmap for new product lines and a diverse product portfolio. Nokia has worked on its products’ form factor, touchscreen capabilities, inclusion of the latest social networking and utility apps, an intuitive mobile web experience and QWERTY keyboards with its Nokia N900 and Nokia N97 editions. I am not sure whether any North American operators have announced new commitments with Nokia, but we should expect some soon.

Nokia is also planning to make Symbian an open source platform, enabling a future of broader capabilities and a deeper reach into the North American markets. Developers will thus have access to every single line of code of an open source operating system, and we should see many more APIs and more and more functionality added to programs.

To round up, it’s a long way to go for NOKIA to regain the North American throne. It’s going to be challenging and worth watching. At the same time, all eyes are now fixed on the fiercest battle in the war of smartphones, the one between smartphone OSs:

Symbian Vs. Blackberry Vs. Apple OSX Vs. Android Vs. Palm OS Vs. Win Mobile.

– Neil Shah

References:

Nokia Quarterly and Annual Reports 2006/2007/2008/2009

Yankee Research: The Battle for Smartphone OS Supremacy

AT&T upgrading to HSPA+ but will it ensure reliability??

January 20, 2010

————————————————————————————————————————

Stephen Lawson of IDG News Service recently mentioned in his article why AT&T needs to spend $5 Billion on its wireless network. I agree with him on this as AT&T has to catch up with the coverage offered by Verizon Wireless.

Though AT&T boasts of the fastest 3G network, and it might well be, customer satisfaction and connection reliability, especially in urban areas, are the two main factors that might tarnish AT&T’s image. And with bandwidth-hungry smartphone users (primarily iPhone users) loading its network and squeezing the backhaul, the situation might get out of control unless AT&T starts acting on it. Apart from loading, the other important factor affecting reliability is coverage, as mentioned earlier.

Issue 1: 3G Speed & Reliability Tests

AT&T’s 3G network is based on HSPA (High-Speed Packet Access), with an upgrade to HSPA+, a system designed to deliver as much as 7.2 Mbps (megabits per second). Verizon uses EV-DO (Evolution-Data Optimized), which that carrier said offers as much as 1.4 Mbps in real-world performance. The speed of the network for individual subscribers depends on a variety of factors, but what matters here is reliability along with speed. The PC World test, conducted by Novarum last year, found mixed results for network speeds among AT&T, Verizon and Sprint but showed AT&T in last place for reliability in all 13 cities tested.

In the above analysis, the “reliability” score depicts the percentage of tests in which the service maintained an uninterrupted connection at a reasonable speed (faster than dial-up) for Verizon, Sprint and AT&T in 13 different cities.
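The reliability score as defined above is simple enough to sketch in a few lines. This is only an illustration of the definition, not the PC World/Novarum methodology: the 56 kbps dial-up threshold, the function name and the sample data are all my own assumptions.

```python
DIAL_UP_KBPS = 56.0  # assumed threshold for "faster than dial-up"

def reliability_score(tests):
    """Percentage of tests with an uninterrupted connection above dial-up speed.

    Each test is an (uninterrupted, speed_kbps) pair.
    """
    if not tests:
        raise ValueError("need at least one test")
    passed = sum(1 for uninterrupted, speed in tests
                 if uninterrupted and speed > DIAL_UP_KBPS)
    return 100.0 * passed / len(tests)

# Made-up sample: four test runs in one city.
sample = [(True, 900.0), (True, 40.0), (False, 1200.0), (True, 650.0)]
print(f"Reliability: {reliability_score(sample):.0f}%")
```

Note that a fast but interrupted session (the third run) counts against the score just like a slow one, which is why a network can lead on speed and still trail on reliability.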

Issue 2: CAPEX on wireless infrastructure

Recent reports from TownHall Investment Research show that AT&T falls short of key competitors Verizon and Sprint in CAPEX on its wireless infrastructure. AT&T’s capital expenditures on its wireless network from 2006 through September 2009 totaled about $21.6 billion, compared with $25.4 billion for Verizon and $16 billion for Sprint (including Sprint’s investments in WiMAX operator Clearwire). Over that time, Verizon has spent far more per subscriber: $353, compared with $308 for AT&T. Even Sprint has outspent AT&T per subscriber, laying out $310 for network capital expenditure. That investment shortfall has been the major cause of AT&T’s poor network performance, which has been reflected in tests by Consumer Reports and PC World.
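As a quick sanity check on these numbers, we can back out the subscriber base each per-subscriber figure implies. This is a sketch under a loud assumption: that the per-subscriber figure is simply total capex divided by a subscriber count. The report may well have computed it differently, so treat the output as illustrative only.

```python
# Figures quoted in the post: (total wireless capex in USD, capex per subscriber in USD).
carriers = {
    "AT&T":    (21.6e9, 308.0),
    "Verizon": (25.4e9, 353.0),
    "Sprint":  (16.0e9, 310.0),
}

def implied_subscribers(total_capex, capex_per_subscriber):
    """Subscriber base implied by total capex / capex per subscriber (assumption)."""
    return total_capex / capex_per_subscriber

implied = {name: implied_subscribers(total, per_sub)
           for name, (total, per_sub) in carriers.items()}

for name, subs in implied.items():
    print(f"{name}: about {subs / 1e6:.0f} million subscribers implied")

# Extra spend AT&T would have needed to match Verizon's per-subscriber level.
att_per_sub = carriers["AT&T"][1]
verizon_per_sub = carriers["Verizon"][1]
gap = implied["AT&T"] * (verizon_per_sub - att_per_sub)
print(f"Gap to Verizon's per-subscriber level: ${gap / 1e9:.1f} billion")
```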

The other issue is AT&T invests more in its wired infrastructure than in its wireless network, even though the wireless business contributes a majority of the carrier’s profit. AT&T gets 57 percent of its operating income from wireless and only 35 percent from wired services, but wireless only gets 34 percent of the capital expenditures, with the wired network taking up 65 percent of that spending.
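One way to see the mismatch is to divide each segment's share of capital spending by its share of operating income. A ratio of 1.0 would mean spending in proportion to income. This is just arithmetic on the percentages quoted above; the ratio itself is my own framing, not a metric from the report.

```python
# Shares quoted in the post, in percent.
segments = {
    "wireless": {"operating_income": 57.0, "capex": 34.0},
    "wired":    {"operating_income": 35.0, "capex": 65.0},
}

def capex_to_income_ratio(segment):
    """Capex share divided by operating-income share (1.0 = proportional)."""
    return segment["capex"] / segment["operating_income"]

ratios = {name: capex_to_income_ratio(seg) for name, seg in segments.items()}
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}")
```

On these figures the wired business gets roughly three times as much capital per point of operating income as the wireless business.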

Issue 3: Backhaul Capacity

Along with investing in upgrades to HSPA 7.2 in the base stations, AT&T needs to remove the backhaul bottlenecks to accommodate high-speed data in the core. Backhaul limiting the speeds is the primary concern, as I mentioned in my previous post on operators choosing the right backhaul solution in light of capex/opex. The $5 billion investment gap could expand to $7 billion because of the need for new backhaul capacity to link AT&T’s wireless network into the wired Internet.

Issue 4: Old Infrastructure

Another looming problem for AT&T is that its E911 emergency calling system, which works on its older GSM (Global System for Mobile communications) network, hasn’t been adapted to use 3G and is unlikely to make the migration soon. That means AT&T will have to maintain that old network for the foreseeable future, including possibly more capital investment for more power-efficient GSM equipment.

Solutions:

Hot on the heels of T-Mobile USA’s announcement that it upgraded its 3G footprint to HSPA 7.2, AT&T Mobility said it upgraded its own 3G cell sites across the country with HSPA 7.2 software. However, AT&T clarified that it is still working to deploy increased backhaul capacity to the sites, a job that will continue into 2011. With this, the customer experience should get a boost from more consistent access to data sessions. So apart from base station upgrades and increased backhaul capacity, AT&T needs to add more base stations and expand its coverage, especially in urban areas where user confidence is shaky. AT&T has already started taking some smart steps by moving 3G service to its longer-range 850 MHz radio band in the San Francisco area, which seems to have helped coverage there, and the company will probably take that strategy nationwide while testing coverage in specific areas and “surgically” increasing capacity.

So the ball is in AT&T’s court and they have to act, spend and expand !!

– Neil Shah

References:

Analyst: AT&T Needs to Spend US$5B to Catch Up, by Stephen Lawson, IDG News Service

A Day in the Life of 3G, by Mark Sullivan, PC World

AT&T Plans to Double 3G Speeds, by Ian Paul, PC World

AT&T Upgrades Cell Sites to HSPA 7.2 Software, by Phil Goldstein, FierceWireless.com

Location Based Services Part II: LBS Network Architectures

January 12, 2010

————————————————————————————————————————

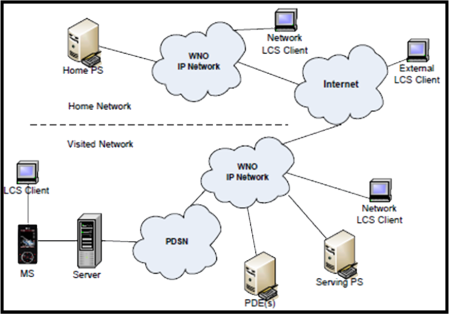

In the previous blog, LBS Part I, we discussed the different location technologies and compared them on different parameters, with their advantages and disadvantages. Today we will see how these positioning technologies integrate with the network architecture in different wireless standards (3GPP, 3GPP2, OMA, WiMAX, LTE).

We will first start with categorizing the location services by their usage as follows:

The above four categories can be practically implemented in the way the MS communicates through the network with the location server.

Once wireless operators see the significant value in LBS and its solid ROI, the operator’s engineering team must select one of two possible deployment methods.

LBS can be implemented in either control plane or user plane mode, each with its own advantages and disadvantages. The “control-plane” approach, while highly reliable, secure, and appropriate for emergency services, is costly and in many cases overkill for commercial location-based services. In both 3GPP and 3GPP2, an IP-based approach known as “user-plane” allows network operators to launch LBS without costly upgrades to their existing SS7 network and mobile switching elements.

1. Control Plane Architecture

The Control plane architecture consists of the following core entities:

- PDE/SMLC: Position Determination Entity/Serving Mobile Location Center – The PDE facilitates determination of the geographical position of a target MS. Input to the PDE for a position request is a set of parameters such as PQoS (Position Quality of Service: accuracy, yield, latency) requirements and information about the current radio environment of the Mobile Station (MS).

- MPC/GMLC: Mobile Positioning Center/Gateway Mobile Location Center – The MPC serves as the point of interface to the wireless network for the position determination network. It retrieves, forwards, stores, and controls position information within the position network. The MPC selects the PDE to use in position determination and forwards the position estimate to the requesting entity or stores it for subsequent retrieval.

- LCS Client: The LCS client is a logical entity that requests the LCS server to provide information on one or more target MSs. Being a logical entity, the LCS client can reside within a PLMN, outside the PLMNs, or even in the UE.

- Geoserver, LBS applications, SCP (Service Control Point) and content

In this configuration, the MPC/GMLC effectively serves as the intermediary and gateway between the applications, which run in the Web services space, and the PDE/SMLC, which runs in the signaling space. It serves as a holding agent for subscriber location information, working with MSC<->VLR<->HLR, and facilitates push and pull transactions. A “push” transaction might be an application that locates a subscriber and delivers a message, perhaps about a sale at a store nearby, while a “pull” transaction would consist of the subscriber invoking a service, such as “Find my nearest ATM”. The service set-up and communication are performed via the traditional signaling network. The MPC/GMLC also serves as a place to perform general administration functions, such as authentication/security, privacy, billing, provisioning, and so on.
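The push/pull distinction can be made concrete with a toy model. The sketch below is not a real MPC/GMLC interface: the class, its method names and the privacy rule (a per-subscriber allow-list of applications) are all hypothetical, chosen only to show where the gateway sits between applications and stored location data.

```python
from dataclasses import dataclass, field

@dataclass
class ToyGateway:
    """Toy stand-in for the MPC/GMLC: holds locations and enforces privacy."""
    locations: dict = field(default_factory=dict)     # subscriber -> (lat, lon)
    allowed_apps: dict = field(default_factory=dict)  # subscriber -> set of app names

    def _locate(self, subscriber, app):
        # Privacy/authorization check before any location is released.
        if app not in self.allowed_apps.get(subscriber, set()):
            raise PermissionError(f"{app} may not locate {subscriber}")
        return self.locations[subscriber]

    def pull(self, subscriber, app):
        """Subscriber invokes a service; the app receives the subscriber's location."""
        return self._locate(subscriber, app)

    def push(self, subscriber, app, message):
        """App locates the subscriber and delivers a location-related message."""
        location = self._locate(subscriber, app)
        return {"to": subscriber, "near": location, "message": message}

gw = ToyGateway()
gw.locations["alice"] = (40.7128, -74.0060)
gw.allowed_apps["alice"] = {"atm_finder", "store_offers"}

print(gw.pull("alice", "atm_finder"))
print(gw.push("alice", "store_offers", "Sale at a store nearby"))
```

Both transaction types go through the same authorization step, which mirrors the point that the gateway is also where privacy and security are administered.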

Let us consider an example of a position request flow between the different entities. It shows a network-initiated location request from the LCS client in the C-plane LBS architecture.

These types of requests, initiated from the network side, are mostly for network performance measurements, emergency services, or push services querying the MS location.
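For readers without the flow diagram in front of them, here is a simplified sketch of such a network-initiated request as a list of hops. It follows the general shape of the mobile-terminated location request in 3GPP TS 23.271, but the step descriptions are my own wording, intermediate radio access nodes are omitted, and the helper functions are purely illustrative.

```python
# Simplified network-initiated (mobile-terminated) location request.
# Each step is (sender, receiver, message); wording is descriptive, not spec-exact.
MT_LR_FLOW = [
    ("LCS Client", "GMLC", "location request for target MS"),
    ("GMLC", "HLR", "ask which MSC currently serves the MS"),
    ("HLR", "GMLC", "serving MSC address"),
    ("GMLC", "MSC", "provide subscriber location"),
    ("MSC", "SMLC", "position the MS (via the radio access network)"),
    ("SMLC", "MSC", "position estimate"),
    ("MSC", "GMLC", "position estimate"),
    ("GMLC", "LCS Client", "location response"),
]

def is_connected_chain(flow):
    """True if every message is sent by the entity that received the previous one."""
    return all(prev[1] == cur[0] for prev, cur in zip(flow, flow[1:]))

def trace(flow):
    """Human-readable numbered trace of the flow."""
    return [f"{i}. {src} -> {dst}: {msg}"
            for i, (src, dst, msg) in enumerate(flow, 1)]

assert is_connected_chain(MT_LR_FLOW)
print("\n".join(trace(MT_LR_FLOW)))
```

The number of signaling hops before a position comes back is a good illustration of why the control-plane approach is reliable but heavy.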

2. User Plane Architecture

The User Plane consists of the following entities:

- PS: Position Server – The PS provides geographic position information of a target MS to requesting entities. It serves as the point of interface to the LCS server functionality in the wireless packet data network. The PS performs functions such as accepting and responding to requests for the location estimate of a target MS, authentication, service authorization, privacy control, billing, and allocation of PDE resources for positioning.

- PDE: Position Determination Entity

The User plane architecture is similar to the control plane but does not include the full functionality of the MPC/GMLC. Instead, it allows the handset to invoke services directly with the trusted location applications via TCP/IP, leaving out traditional SS7 messaging altogether. A scaled-down version of the MPC/GMLC handles authentication/security for the user-plane implementation approach. This method is focused on pull transactions, where the subscriber invokes a location-sensitive service. However, push transactions are possible and supported through the limited MPC/GMLC function.

Let us consider an example of a position request flow between the different entities. It shows a handset-initiated location request from the LCS client residing in the MS in the U-plane LBS architecture.

These requests are initiated from the mobile station, mostly for location-based searches such as restaurants and navigation, or for pull services querying the position server.

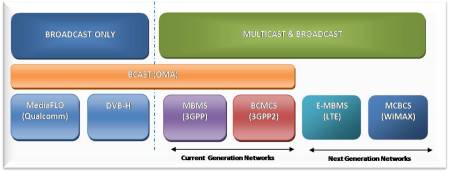

3. OMA (Open Mobile Alliance) U-Plane Architecture

The Open Mobile Alliance (OMA), a mobile communications industry forum, was created to bring open standards, platform independence, and global interoperability to the LBS market. More than 360 companies are represented in OMA, including MNOs and wireless vendors, mobile device manufacturers, content and service providers, and other suppliers.

The OMA User Plane consists of the following entities and protocols.

- MLP: Mobile Location Protocol: MLP is a protocol for querying the position of mobile station between location server and a location service client

- RLP: Roaming Location Protocol: RLP is a protocol between location servers while UE is roaming

- PCP: Privacy Checking Protocol: PCP is a protocol between location server and privacy checking entity

SUPL (Secure User Plane Location):

SUPL is developed by the Open Mobile Alliance. It is a separate network layer that performs many LBS functions that would otherwise be governed within the C-Plane, and it is designed to work with existing mobile Internet systems. With SUPL, MNOs can validate the potential of the LBS market with a relatively small budget and few risks. SUPL utilizes existing standards to transfer assistance data and positioning data over a user plane bearer. It is an alternative and complementary solution to the existing 3GPP and 3GPP2 control plane architecture. SUPL supports all handset-based and assisted positioning technologies and is data bearer independent.

SUPL architecture is composed of two basic elements: a SUPL Enabled Terminal (SET) and a SUPL Location Platform (SLP)

- SUPL Enabled Terminal (SET): The SET is a mobile device, such as a phone or PDA, which has been configured to support SUPL transactions.

- SUPL Location Platform (SLP): The SLP is a server or network equipment stack that handles tasks associated with user authentication, location requests, location-based application downloads, charging, and roaming.

The SLP consists of the following functional entities:

- SUPL Location Center (SLC) coordinates the operation of SUPL in the network and manages SPCs.

- SUPL Positioning Center (SPC) provides positioning assistance data to the SET and calculates the SET position.

The core strength of SUPL is the utilization, wherever possible, of existing protocols, IP connections, and data-bearing channels (GSM, GPRS, CDMA, EDGE or WCDMA). SUPL supports C-Plane protocols developed for the exchange of location data between a mobile device and a wireless network, including RRLP (3GPP: Radio Resource LCS Protocol) and TIA-801 (Telecommunications Industry Association 801-A, Position Determination Service for cdma2000). SUPL also supports MLP (Mobile Location Protocol) and ULP (UserPlane Location Protocol). MLP is used in the exchange of LBS data between elements such as an SLP and a GMLC, or between two SLPs; ULP is used in the exchange of LBS data between an SLP and a SET.

Let us consider an example of a position request flow between the different entities. It shows a SET-initiated location request in the OMA-SUPL U-plane LBS architecture.
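As a rough illustration, a SET-initiated session can be modeled as an ordered sequence of ULP messages. The message names below are the ones used by OMA SUPL, but the sequence is simplified (no roaming, no error cases), and the validator function is a hypothetical helper of my own, not part of any SUPL library.

```python
# Expected order of ULP messages in a SET-initiated session (simplified).
EXPECTED = ["SUPL START", "SUPL RESPONSE", "SUPL POS INIT", "SUPL POS", "SUPL END"]

# Who sends each message; SUPL POS carries the positioning payload
# (for example RRLP or TIA-801) and can flow in both directions.
SENDER = {
    "SUPL START": "SET",
    "SUPL RESPONSE": "SLP",
    "SUPL POS INIT": "SET",
    "SUPL POS": "SET or SLP",
    "SUPL END": "SLP",
}

def validate_session(messages):
    """Return True if messages follow EXPECTED; SUPL POS may repeat."""
    step = 0
    for msg in messages:
        if step < len(EXPECTED) and msg == EXPECTED[step]:
            step += 1
        elif msg == "SUPL POS" and step > 0 and EXPECTED[step - 1] == "SUPL POS":
            continue  # another round of the positioning exchange
        else:
            return False
    return step == len(EXPECTED)

session = ["SUPL START", "SUPL RESPONSE", "SUPL POS INIT",
           "SUPL POS", "SUPL POS", "SUPL END"]
for msg in session:
    print(f"{SENDER[msg]:>10}: {msg}")
print("valid session:", validate_session(session))
```

Everything here travels over an ordinary IP connection between the SET and the SLP, which is the whole point of the user-plane approach.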

SUPL vs. C-Plane

Two functional entities must be added to the C-Plane network in order to support location services: a Serving Mobile Location Center (SMLC), which controls the coordination and scheduling of the resources required to locate the mobile device; and a Gateway Mobile Location Center (GMLC), which controls the delivery of position data, user authorization, charging, and more. Although simple enough in concept, the actual integration of SMLCs and GMLCs into the Control Plane requires multi-vendor, multi-platform upgrades, as well as modifications to the interfaces between the various network elements.

LBS through SUPL is much less cumbersome. The SLP takes on most of the tasks that would normally be assigned to the SMLC and GMLC, drastically reducing interaction with Control Plane elements. SUPL supports the same protocols for location data that were developed for the C-Plane, which means little or no modification of C-Plane interfaces is required. Because SUPL is implemented as a separate network layer, MNOs have the choice of installing and maintaining their own SLPs or outsourcing LBS to a Location Services Provider.

4. LBS Architecture in WiMAX

The WiMAX network architecture for LBS is based on the basic network reference model (NRM) specified by the WiMAX Forum. The model divides the network architecture between two separate business entities: Network Access Providers (NAPs), which provide radio access and infrastructure, and Network Service Providers (NSPs), which provide IP connectivity with subscription and service delivery functions.

The NAP is typically deployed as one or more access service networks (ASNs). The NSP is typically deployed as one or more connectivity service networks (CSNs). The NAP interfaces with the MS on one side and the CSN on the other.

The following shows location request initiation from an application located either in the device or in the network.

This is basically an MS-managed location service. The MS receives location requests from applications, takes the necessary measurements, determines its location and provides it to the requesting applications through upper-layer messaging. The location calculations at the MS are aided by geolocation parameters of the serving base station (BS) and neighboring BSs, which the serving BS broadcasts periodically using the layer 2 LBS-ADV message defined in IEEE 802.16-2009. The LBS-ADV message delivers the XYZ coordinates (the absolute and relative positions of the serving and neighboring BSs), allowing the MS to perform triangulation or trilateration (using either EOTDA or RSSI), further aided by GPS, to locate itself accurately. In this framework, no major LBS-specific functional support is required in either the ASN or the CSN. A network-managed location service, by contrast, requires network enhancements and a few added functional entities, such as a Location Requester, Location Server, Location Controller, and Location Agent.
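To give a feel for what the MS does with the broadcast BS coordinates, here is a minimal 2D trilateration sketch. It assumes the MS has already turned its RSSI or timing measurements into distance estimates, which is the hard part in practice and is not shown. The Z coordinate is ignored, and the station layout and function name are made up for illustration.

```python
import math

def trilaterate(stations, ranges):
    """Estimate (x, y) from three station positions and measured ranges."""
    (x1, y1), (x2, y2), (x3, y3) = stations
    r1, r2, r3 = ranges
    # Subtracting the first circle equation from the other two
    # removes the squared unknowns and leaves a 2x2 linear system.
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("stations are collinear; position is ambiguous")
    # Cramer's rule for the 2x2 system.
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y

# Hypothetical layout in metres: serving BS at the origin plus two neighbours.
stations = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0)]
true_pos = (300.0, 400.0)
ranges = [math.dist(true_pos, s) for s in stations]  # perfect, noise-free ranges

x, y = trilaterate(stations, ranges)
print(f"Estimated position: ({x:.1f}, {y:.1f})")
```

With noise-free ranges the estimate matches the true position. Real measurements are noisy, which is one reason the standard leaves room for GPS assistance and more than three base stations.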

Also, the WiMAX network architecture for LBS is designed to accommodate user plane, control plane, and mixed-plane location approaches. The big advantage of user plane location is that the LS can reach the MS directly, and signaling is minimized across the various reference points. However, for this to happen, the MS needs to have obtained an IP address and be fully registered with the LS, and application layer support is required in the MS.

In contrast, for the control plane location, the LS does not communicate directly with the MS, and hence there is no hard requirement for the MS to have obtained an IP address. In other words, the control plane location approach relies more on the L2 connectivity of the MS. However, the signaling costs are generally higher in control plane location as the signaling will have to traverse multiple reference points before measurements can be obtained.

The mixed-plane method is simply the LS invoking both control plane measurements and user plane measurements at the same time. The LS can then perform a hybrid location solution by combining the measurements to get much better accuracy for the location fix. This approach is also fully supported in the WiMAX network. The trade-off is that this method costs a whole lot more in latency for the fix and in associated signaling; however, it translates to much better accuracy for the MS location indoors, where an insufficient number of GPS satellites may be visible.
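The trade-offs between the three approaches can be summarized as a small decision function. This is only a sketch of the reasoning above, not logic taken from the WiMAX Forum specification: the `MobileState` fields, the `need_best_accuracy` flag and the function name are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class MobileState:
    has_ip_address: bool
    registered_with_ls: bool
    app_layer_support: bool

def choose_location_plane(ms, need_best_accuracy=False):
    """Pick 'user', 'control' or 'mixed' following the trade-offs described above."""
    user_plane_ok = (ms.has_ip_address and ms.registered_with_ls
                     and ms.app_layer_support)
    if need_best_accuracy and user_plane_ok:
        return "mixed"    # combine both measurement sets; more latency and signaling
    if user_plane_ok:
        return "user"     # LS reaches the MS directly; least signaling
    return "control"      # relies on L2 connectivity; no IP address needed

ready = MobileState(has_ip_address=True, registered_with_ls=True, app_layer_support=True)
no_ip = MobileState(has_ip_address=False, registered_with_ls=False, app_layer_support=True)

print(choose_location_plane(ready))
print(choose_location_plane(ready, need_best_accuracy=True))
print(choose_location_plane(no_ip))
```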

5. LBS in LTE

LTE also generally supports the same types of positioning methods (Cell ID, A-GPS, mobile scan report-based, and hybrid) as in WiMAX. LTE offers user and control plane delivery of GPS assistance data; WiMAX chose to provide only user plane delivery. The rationale was that rapid IP session setup with the LS offered by WiMAX minimizes the need for a control plane solution. In WiMAX, authorization and authentication for LBS service is provided by the AAA, whereas in LTE the gateway mobile location center (GMLC) provides the equivalent functionality. The LTE Location Services specification is being developed under the current work plan and targeted for 3GPP Release 9.

This sums up the Location Based Services Architecture covering 3GPP, 3GPP2, WiMAX, LTE and OMA standards.

In the next Part we shall cover the Use Cases, Business Model with current and future trends for LBS.

– Neil Shah

References:

3GPP TS 23.271, “Functional Stage 2 Description of Location Services (LCS)”; http://www.3gpp.org/

Open Mobile Alliance, “Secure User Plane Location V 2.0 Enabler Release Package”; http://member.openmobilealliance.org/

Etemad, K., Venkatachalam, M., Ballantyne, W., Chen, B.,(2009) “Location Services in WiMAX Networks”, IEEE Communications Magazine.

WiMAX Forum, “Protocols and Procedures for Location Based Services,” v. 1.0.0, May 2009.

OMA, O. M. (2007). Enabler Release Definition for Secure UserPlane for Location (SUPL) . OMA

Faggion, N., S.Leroy, & Bazin, C. (2007). Alcatel Location-based Services Solution. France.

3GPP2. (2000). Location-Based Services Systems LBSS: Stage 1 Description. 3GPP2 S.R0019 . 3GPP2.

3GPP. (2006). 3GPP TS 23.271 V7.4.0 Technical Specification Group Services and System Aspects Functional stage 2 description of Location Services (LCS) (Release 7).

Understanding the WiMAX Business Model

January 8, 2010

————————————————————————————————————————

Multimedia services and Internet applications have been the primary drivers of growth and demand in mobile broadband. They have pushed operators to innovate and upgrade to newer technologies and architectures to offer services at lower cost while improving the user experience for end users.

The transition to the next generation network has already been envisioned by the industry players, and the move has been outlined to meet the set objectives. The higher-level objectives include higher data rates, greater system efficiency, increased data capacity, and a highly scalable, flatter all-IP architecture with successful interoperability with mobile devices across different networks and technologies. This has led to the advent of next generation networks like Mobile WiMAX (Worldwide Interoperability for Microwave Access), developed jointly by the IEEE and the WiMAX Forum based on the IEEE 802.16e-2005 global standard, and LTE (Long Term Evolution), developed by 3GPP in its Release 8.

We will deep dive into the WiMAX business model analyzing the total cost of ownership, revenues and map the current state of WiMAX deployments around the world.

As a standards-based technology with wide industry support, a large ecosystem of developers, and a rapidly growing list of commercial installations, WiMAX stands to benefit from economies of scale and a vast embedded base of WiMAX enabled devices – driving down costs while spurring growth in subscriber adoption.

The other important factor operators consider is how the platform fits into their existing short-term and long-term business model, measured by the total cost of ownership and the potential to harness time-to-market advantages to grow subscriptions and generate revenue. In the end, detailed business modeling customized to the operator’s market profile and service goals will show how to structure the WiMAX investment to maximize returns.

COSTS

We will first identify the Cost Model for WiMAX concerning the operator’s investment.

As usual, we will break the cost into two major components:

1. CAPEX: Capital Expenditure

2. OPEX: Operating Expenditure

The initial investment in a WiMAX deployment focuses largely on capital components associated with procuring the necessary equipment throughout the network and systems architecture. As WiMAX service is introduced and subscriber adoption and usage rates grow, operating expenses will consume a growing share of the total cost of ownership. The end-to-end deployment and operational efforts both contribute to the cost of ownership.

The Total Cost of Ownership (TCO) of a WiMAX network = CAPEX + OPEX

Capital expense normally accounts for the larger share of the initial costs, but operating expenses outweigh the initial capital outlay over time. For WiMAX it is estimated that over the course of 6 years capital expenses such as infrastructure, core and backhaul equipment will contribute roughly 25%-30% of the TCO, while operating expenses including IT & operations, site maintenance, device subsidies, support and administration will account for roughly 70%-75% of the TCO.
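The split above can be illustrated with a quick calculation. This is only a sketch: the input figures (a 100-unit capital outlay and a flat 45 units of operating cost per year) are hypothetical and chosen just to land in the quoted range; only the formula TCO = CAPEX + OPEX comes from the text.

```python
def tco_breakdown(capex, annual_opex, years):
    """Return (tco, capex_share, opex_share) for a simple flat-OPEX model."""
    opex = annual_opex * years
    tco = capex + opex  # TCO = CAPEX + OPEX
    return tco, capex / tco, opex / tco


# Hypothetical inputs, not figures from any real deployment.
tco, capex_share, opex_share = tco_breakdown(capex=100.0, annual_opex=45.0, years=6)
print(f"TCO={tco:.0f}  CAPEX share={capex_share:.1%}  OPEX share={opex_share:.1%}")
```

A real model would let OPEX grow with the subscriber base rather than stay flat, which pushes the OPEX share up further in later years.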

Operating costs can be expected to comprise the largest share of the cost of ownership.

Operators will need to pay due attention to deploying a WiMAX service network that can be readily operationalized with effective management capabilities and strong integration to the systems architecture.

WiMAX offers significant cost advantages over traditional cellular or broadband alternatives in both greenfield and overlay installations. The economics of WiMAX deployment have been shown to be favorable in markets as diverse as emerging markets with challenging price constraints, which seek access to basic voice and data connectivity, and mature markets, which seek to enhance existing broadband services with mobile broadband applications.

Because WiMAX is a licensed-spectrum technology platform, investment decisions are predicated on access to appropriately regulated spectrum. Almost three quarters of the spectrum allocated for WiMAX globally lies in the 2.5 GHz and 3.5 GHz bands.

WiMAX networks deployed at 3.5 GHz may require almost 30% more sites for a given coverage area than a 2.5 GHz installation. The additional sites at 3.5 GHz result in an approximately 13% increase in total cost of ownership over 2.5 GHz. The TCO increase is smaller than the site increase because fixed costs common to both networks, including operational line items such as subscriber acquisition, systems integration and network management, do not grow with the number of sites. It is important to note that over time, as capacity demand increases, the 2.5 GHz system requires investment in new build-out earlier than the 3.5 GHz system, so the two systems eventually reach parity in cost of ownership.
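A back-of-the-envelope check of these two percentages, as a sketch: assume (this linear split is an assumption, not something stated in the post) that a fraction of the TCO scales directly with the number of sites and the rest is fixed. Then 30% more sites producing 13% more TCO implies that a bit over 40% of the TCO is site-dependent.

```python
def tco_increase(site_increase, site_dependent_share):
    """Relative TCO increase when only site-dependent costs scale with site count."""
    return site_increase * site_dependent_share


def implied_site_share(site_increase, observed_tco_increase):
    """Share of TCO that must be site-dependent to explain the observed increase."""
    return observed_tco_increase / site_increase


share = implied_site_share(0.30, 0.13)
print(f"Implied site-dependent share of TCO: {share:.1%}")
```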

REVENUES

The WiMAX architecture can support a host of rich Web-based applications and enhanced Internet services as well as operator-managed “walled garden” services in the same network, allowing operators to explore creative service offerings and Internet-friendly business models. These may include personal communications, mobile entertainment, mobile commerce, enterprise applications and a rich mobile web with connections across a landscape of devices. To complement that, the over-the-air activation protocols and the associated network conformance testing and certification in the WiMAX Forum are structured to ensure successful network entry and provisioning of a variety of mobile Internet devices, including embedded communications devices and consumer electronics distributed through retail channels.

The all-IP flat architecture has changed the relationship between operators and end users across the entire service delivery value chain. Different actors such as content providers, advertisers and application service providers now play different roles and share the stage with the wireless operator. Operators are collaborating with these actors to drive differentiation through content, applications and a high level of personalization of products and services. Thus, by providing a different mix of value-added services, devices and plans for different end-user segments, operators may realize stronger growth, higher revenue (ARPU), greater market share (number of subscribers) and a swift return on their WiMAX investment.

FACTS & FIGURES:

Considering some trends and statistics of ongoing WiMAX deployments and subscriber acquisition throughout the world, we have the following figures and growth projections:

Products

Let’s have a look at some of the WiMAX Certified products from WiMAX vendors.

– Neil Shah

Femtocells & Relays in Advanced Wireless Networks

January 6, 2010

With the huge growth of mobile phones, complemented by a revolution in wireless network technologies, there has been a huge change in consumers’ lifestyles and their dependence on mobile phones. With the emergence of smartphones (mobile web), consumers are not only replacing their fixed lines but have also started reducing the number of personal computers in the home. But there is still a long way to go, as the demographic for this adoption is quite limited due to various factors. Fundamentally, consumers want great voice quality, reliable service, and low prices. But today’s mobile phone networks often provide poor indoor coverage and expensive per-minute pricing. In fact, with the continued progress in broadband VoIP offerings such as Vonage and Skype, wireless operators are at a serious disadvantage in the home.

Hence wireless operators are looking to enhance their macro-cell coverage with indoor micro-cell coverage, deploying small base stations such as femtocells or using relay technology. Femtocells are miniature base stations the size of a DSL router or cable modem that provide indoor wireless coverage to mobile phones using existing broadband Internet connections.

After pointing out some key advantages of femtocells and relays, we will focus on their adoption in advanced wireless networks (WiMAX and LTE).

FEMTOCELLS

Technical Advantages:

Low Cost: The initial business model would offer femtocells as a consumer purchase through mobile operators.

Low Power: Around 8 mW to 120 mW, lower than Wi-Fi APs.

Easy to Use: Plug-and-play, easily installed by consumers themselves.

Compatibility & Interoperability: Compatible with UMTS and EVDO standards as well as WiMAX, UMB and LTE standards.

Deployment: In wireless-operator-owned licensed spectrum, unlike Wi-Fi.

Broadband Connected: Femtocells use Internet Protocol (IP) and flat base station architectures, and connect to mobile operator networks via a wired broadband Internet service such as DSL, cable, or fiber optics.

With the above setup, femtocells solve the following existing problems and extend wireless coverage, enabling newer applications and services.

Customer’s point of view:

Increased Indoor Coverage: Coverage radius is 40 m to 600 m in most homes, providing full signal throughout the household.

Load Sharing: Unlike macro cells, which support hundreds of users, femtocells will support 5-7 simultaneous users, so there is less contention for the medium and a higher data rate per user.

Better Voice Quality: As users will be within the coverage envelope and closer to the femtocell, they will get better voice and sound quality with fewer dropped calls.

Better Data/Multimedia Experience: Femtocells will deliver better data performance for streaming music, downloads and web browsing, with fewer interruptions and lost connections than in a macro-cell environment.

Wireless Operator’s point of view:

Lower CAPEX: Increased usage of femtocells will cut huge capital costs on macro cell equipment and deployment. This includes cost savings in site acquisition, site equipment, and site connections to the switching centers.

Increased Network Capacity: Increased usage of femtocells will reduce stress on macro cells, increasing the overall capacity of mobile operators’ networks.

Lower OPEX: Fewer macro cell sites reduce overall site maintenance, equipment maintenance and backhaul costs.

Newer Revenue Opportunities: By providing excellent indoor coverage and a superior user experience with voice and multimedia data services, operators have an opportunity to raise ARPU with more additions to family plans.

Reduced Churn: Improved coverage, a better multimedia experience and fewer dropped calls will lead to a significant reduction in customer churn.

Technical hurdles:

Spectrum: Femtocells work in licensed spectrum, and since spectrum is the operator’s most expensive resource, frequency planning will be a major technical hurdle.

RF Coverage Optimization: In macro cells, radio tuning and optimization for RF coverage is done manually by technicians, which is not feasible for every femtocell. Self-optimization and tuning over time according to the indoor coverage map therefore has to be done either automatically or remotely, which is a technical challenge.

RF Interference: Femtocells might be prone to femto-macro interference and also femto-femto interference in highly dense macro or micro environments, which might affect the user experience.

Automatic System Selection: When an authorized user of a femtocell moves in or out of the coverage of the femtocell, and is not on an active call, the handset must correctly select the system to operate on. In particular, when a user moves from the macro cell into femtocell coverage, the handset must automatically select the femtocell, and vice versa.

Handoffs: When an authorized user of a femtocell moves in or out of coverage of the femtocell, and is on an active call, the handset must correctly hand off between the macro cell and femtocell networks. Such handoffs are especially critical when a user loses the coverage of the network that is currently serving it, as in the case of a user leaving the house where a femtocell is located.

Security & Scalability: A femtocell must identify and authenticate itself to the operator’s network as being valid. With millions of femtocells deployed in a network, operators will require large-scale security gateways at the edge of their core networks to handle millions of femtocell-originated IPsec tunnels.

Femto Management: Activation on purchase and plug-and-play installation by the end user are important steps, along with proper access control management that allows the end user to add or delete active device connections in the household. In addition, operators must have management systems that give first-level support technicians full visibility into the operation of the femtocell and its surrounding RF environment.

RELAYS:

Relay transmission can be seen as a kind of collaborative communications, in which a relay station (RS) helps to forward user information from neighboring user equipment (UE)/mobile station (MS) to a local eNode-B (eNB)/base station (BS). In doing this, an RS can effectively extend the signal and service coverage of an eNB and enhance the overall throughput performance of a wireless communication system. The performance of relay transmissions is greatly affected by the collaborative strategy, which includes the selection of relay types and relay partners (i.e., to decide when, how, and with whom to collaborate).

Relays that receive and retransmit signals between base stations and mobiles can be used to effectively increase throughput and extend the coverage of cellular networks. Infrastructure relays do not need a wired connection to the network, thereby offering savings in operators’ backhaul costs. Mobile relays can be used to build local area networks between mobile users under the umbrella of wide area cellular networks.

Advantages:

Increased Coverage: With multi-hop relays, macro cell coverage can be extended to places the base station cannot reach.

Increased Capacity: Relays create hotspot solutions with reduced interference, increasing the overall capacity of the system.

Lower CAPEX & OPEX: Relays that extend coverage eliminate the need for additional base stations and the corresponding backhaul lines, saving wireless operators deployment costs and the corresponding maintenance costs. The relays can be user-owned relays provided by operators and can be mounted on rooftops or indoors.

Better Broadband Experience: Higher data rates become available because users are close to the mini RF access point.

Reduced Transmission Power: With relays deployed there is a considerable reduction in transmission power, which reduces co-channel interference and increases capacity.

Faster Network Rollout: Relays are simple to deploy and speed up the network rollout process, with a higher level of outdoor-to-indoor service and the use of macrodiversity, which increases coverage quality through less fading and stronger signal levels.

On the other hand, a Type-II (or transparency) RS can help a local UE unit, which is located within the coverage of an eNB (or a BS) and has a direct communication link with the eNB, to improve its service quality and link capacity. So a Type-II RS does not transmit the common reference signal or the control information, and its main objective is to increase the overall system capacity by achieving multipath diversity and transmission gains for local UE units.

Pairing Schemes for Relay Selection

One of the key challenges is to select and pair nearby RSs and UE units to achieve the relay/cooperative gain. The selection of relay partners (i.e., with whom to collaborate) is a key element for the success of the overall collaborative strategy. Practically, it is very important to develop effective pairing schemes to select appropriate RSs and UE units to collaborate in relay transmissions, thus improving throughput and coverage performance for future relay-enabled mobile communication networks.

This pairing procedure can be executed in either a centralized or a distributed manner. In a centralized pairing scheme, an eNB serves as a control node that collects the required channel and location information from all the RSs and UE units in its vicinity and then makes pairing decisions for all of them. In contrast, in a distributed pairing scheme, each RS selects an appropriate UE unit in its neighborhood by using local channel information and a contention-based medium access control (MAC) mechanism. Generally speaking, centralized schemes require more signaling overhead but can achieve better performance.
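To make the centralized case concrete, here is a minimal sketch in which the eNB has already collected a channel-quality report for every candidate RS-UE link and pairs them greedily, best link first. The greedy rule, the function name and the sample numbers are illustrative assumptions; this is not the pairing algorithm of any particular standard or of the cited papers, and a greedy pass is not guaranteed to find the globally best pairing.

```python
def centralized_pairing(gains):
    """Greedy centralized pairing performed at the control node (eNB).

    gains maps (rs_id, ue_id) -> reported channel quality.
    Returns a dict rs_id -> ue_id in which each RS and each UE appears at most once.
    """
    pairs = {}
    used_ues = set()
    # Visit candidate links from best to worst reported quality.
    for (rs, ue), _gain in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
        if rs not in pairs and ue not in used_ues:
            pairs[rs] = ue
            used_ues.add(ue)
    return pairs


# Made-up channel reports for two relays and two UE units.
reports = {
    ("RS1", "UE1"): 0.9, ("RS1", "UE2"): 0.4,
    ("RS2", "UE1"): 0.8, ("RS2", "UE2"): 0.3,
}
pairing = centralized_pairing(reports)
print(pairing)
```

The signaling overhead mentioned above shows up here as the `reports` table: every RS-UE link has to be measured and sent to the eNB before any decision is made.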

Relay Transmission Schemes

Many relay transmission schemes have been proposed to establish two-hop communication between an eNB and a UE unit through an RS:

Amplify and Forward: An RS receives the signal from the eNB (or UE) in the first phase. It amplifies this received signal and forwards it to the UE (or eNB) in the second phase. This Amplify and Forward (AF) scheme is very simple and has a very short delay, but it also amplifies noise.

Selective Decode and Forward: An RS decodes (channel decoding) the received signal from the eNB (UE) in the first phase. If the decoded data passes a cyclic redundancy check (CRC), the RS performs channel coding and forwards the new signal to the UE (eNB) in the second phase. This Selective Decode and Forward (DCF) scheme can effectively avoid error propagation through the RS, but the processing delay is quite long.

Demodulation and Forward: An RS demodulates the received signal from the eNB (UE) and makes a hard decision in the first phase (without decoding the received signal). It modulates and forwards the new signal to the UE (eNB) in the second phase. This Demodulation and Forward (DMF) scheme has the advantages of simple operation and low processing delay, but it cannot avoid error propagation because of the hard decisions made at the symbol level in phase one.
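The difference between the three schemes can be sketched in a few lines. This is a toy model under stated assumptions: BPSK symbols represented as plain floats, made-up sample values, and Python's `zlib.crc32` standing in for the CRC; channel coding and decoding are left out entirely.

```python
import zlib


def amplify_and_forward(samples, gain):
    """AF: scale the received samples; any noise in them is scaled too."""
    return [gain * s for s in samples]


def demodulate_and_forward(samples):
    """DMF: hard BPSK decision per symbol, then re-modulate to +1.0 / -1.0."""
    return [1.0 if s >= 0 else -1.0 for s in samples]


def selective_decode_and_forward(payload, received_crc):
    """DCF: forward the payload only if its CRC matches; otherwise stay silent."""
    if zlib.crc32(payload) == received_crc:
        return payload  # a real RS would re-encode before forwarding
    return None


noisy = [0.5, -1.5, -0.25]  # BPSK symbols plus noise (illustrative values)
af_out = amplify_and_forward(noisy, 2.0)
dmf_out = demodulate_and_forward(noisy)
good = selective_decode_and_forward(b"data", zlib.crc32(b"data"))
bad = selective_decode_and_forward(b"dara", zlib.crc32(b"data"))
print(af_out, dmf_out, good, bad)
```

AF passes the noise along at a higher level, DMF cleans up the amplitude but commits to a possibly wrong symbol decision, and DCF refuses to forward a block that fails the check, which is how it avoids propagating errors.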

Comparison between 3GPP LTE Advanced and IEEE 802.16j RSs

The comparison below is between Type I (3GPP LTE-Advanced) and non-transparency (IEEE 802.16j) RSs.

Technical Issues

Practical issues of cooperative schemes, such as signaling between relays and the different propagation delays caused by different relay locations, are often overlooked. If the difference in time of arrival between the direct source-to-destination path and the source-relay-destination paths is constrained, then the relays must be located inside an ellipsoid whose foci are the source and the destination. Thus, in practice, such a cooperative system should be a narrowband one, or a guard interval between transmitted symbols should be used to avoid intersymbol interference due to relays.

In-band relays consume radio resources, and out-of-band relays need multiple transceivers.
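The geometric constraint can be checked numerically. The sketch below assumes free-space propagation at the speed of light and ignores any processing delay inside the relay; the coordinates and the 1 microsecond bound are made-up example values.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def relay_within_delay_bound(source, relay, dest, max_excess_delay_s):
    """True if the relayed path arrives within the allowed delay after the direct path.

    Equivalent to asking whether the relay lies inside the ellipse (ellipsoid in 3D)
    whose foci are the source and the destination.
    """
    excess_m = (math.dist(source, relay) + math.dist(relay, dest)
                - math.dist(source, dest))
    return excess_m <= SPEED_OF_LIGHT * max_excess_delay_s


src, dst = (0.0, 0.0), (1000.0, 0.0)  # positions in metres
near = relay_within_delay_bound(src, (500.0, 100.0), dst, 1e-6)
far = relay_within_delay_bound(src, (500.0, 1000.0), dst, 1e-6)
print(near, far)
```

A 1 microsecond bound corresponds to roughly 300 m of extra path length, so the relay close to the direct line qualifies while the one a kilometre off to the side does not.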

References:

IEEE P802.16j/D9, “Draft Amendment to IEEE Standard for Local and Metropolitan Area Networks Part 16: Air Interface for Fixed and Mobile Broadband Wireless Access Systems: Multihop Relay Specification,” Feb. 2009.

S. W. Peters and R. W. Heath Jr., “The Future of WiMAX: Multihop Relaying with IEEE 802.16j,” IEEE Commun.Mag., vol. 47, no. 1, Jan. 2009, pp. 104–11.

Y. Yang, H. Hiu, J. Xu, G. Mao, “Relay technologies for WiMAX and Advanced Mobile systems,” IEEE Commun. Mag., Oct. 2009.

C. K. Lo, R. W. Heath, and S. Vishwanath, “Hybrid-ARQ in Multihop Networks with Opportunistic Relay Selection,” Proc. IEEE ICASSP ‘07, Apr. 2007, pp. 617–20.

Location Based Services Part I: Technologies in Wireless Networks

January 3, 2010

Wireless carriers and their partners are developing a host of new products, services, and business models based on data services to grow both average revenue per user (ARPU) and subscriber numbers. The developer and user community is focusing on real-world mobile web services in an emerging mobile application field, namely Location Based Services (LBS), which provide information specific to a location and hence are a key part of this portfolio.

Definition: A service provided to a subscriber based on the current geographic location of the mobile station (MS). Location-based services (LBS) give service providers the means to deliver personalized services to their subscribers.

LBS reflects the convergence of multiple technologies:

Internet – Geographic Information System – Mobile devices

Localization

Localization is based on the analysis, evaluation and interpretation of appropriate input parameters. Most of these parameters exploit physical characteristics that can be measured directly or indirectly.

From a physical localization point of view, there are three principal techniques to be distinguished:

1. Signal Strength & Network parameters:

The most basic wireless location technology is given by the radio network setup itself. Each base station is assigned a unique identification number, the Cell ID. The Cell ID is received by all mobile phones in the base station’s coverage area, so the position of a target can be derived from the coordinates of the base station. Signal strength can be used to narrow down the target position, but wave propagation is strongly affected by several factors, especially in urban areas, so signal strength is distorted and is not a reliable parameter for localization. Cell ID accuracy can be further enhanced by including a measure of Timing Advance (TA) in GSM/GPRS networks or Round Trip Time (RTT) in UMTS networks. TA and RTT use time offset information sent from the Base Transceiver Station (BTS) to adjust a mobile handset’s relative transmit time so that its signal arrives at the BTS correctly aligned in time. These measurements can be used to determine the distance from the Mobile Station (MS/UE) to the BTS, further reducing the position error.
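As a rough illustration of how a Timing Advance value translates into distance, the sketch below uses standard GSM figures that are not given in the post itself: a bit period of 48/13 microseconds and a 6-bit TA value (0 to 63), with each TA step representing one bit period of round-trip delay. Treat it as an approximation, since real measurements are quantized and affected by multipath.

```python
SPEED_OF_LIGHT = 299_792_458.0     # m/s
GSM_BIT_PERIOD_S = 48 / 13 * 1e-6  # about 3.69 microseconds


def ta_to_distance_m(timing_advance):
    """Approximate MS-to-BTS distance for a GSM Timing Advance value (0..63).

    One TA step is one bit period of round-trip delay, so the one-way
    distance per step is c * T_bit / 2 (roughly 550 m).
    """
    if not 0 <= timing_advance <= 63:
        raise ValueError("GSM timing advance is a 6-bit value (0..63)")
    return timing_advance * SPEED_OF_LIGHT * GSM_BIT_PERIOD_S / 2


for ta in (0, 1, 10, 63):
    print(ta, round(ta_to_distance_m(ta)), "m")
```

Because TA only changes in steps of about 550 m, it places the handset in a ring around the base station rather than at a point, which is why it refines Cell ID rather than replacing the lateration methods described next.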

2. Triangulation/Trilateration:

In trigonometry and elementary geometry, triangulation is the process of finding the distance to a point by calculating the length of one side of a triangle formed by that point and two other reference points, given measurements of the sides and angles of the triangle. Such trigonometric methods are used for position determination. Two variants can be distinguished:

- Distance-based (tri-)lateration: the position of an object is computed by measuring its distance from multiple reference points. Examples: Global Positioning System (GPS), TOA (Time of Arrival), AFLT (Advanced Forward Link Trilateration), E-OTD (Enhanced Observed Time Difference).

- Angle- or direction-based (tri-)angulation: the position is computed from the directions in which the signal arrives at multiple reference points. Example: AOA (Angle of Arrival).
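Distance-based lateration can be sketched in two dimensions as follows. The example assumes three reference points with exactly known positions and error-free distance measurements; real systems work in three dimensions, have noisy measurements, and usually solve a least-squares problem instead.

```python
import math


def trilaterate_2d(p1, r1, p2, r2, p3, r3):
    """Solve for (x, y) given three reference points and measured distances.

    Subtracting the first circle equation from the other two gives a
    2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("reference points are collinear")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det


# Reference points at known positions; distances measured to a target at (3, 4).
x, y = trilaterate_2d((0, 0), 5.0, (10, 0), math.sqrt(65), (0, 10), math.sqrt(45))
print(round(x, 6), round(y, 6))
```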

3. Proximity:

Proximity is based on determining the location of an object that is near a well-known reference location. The following sub-methods can be distinguished:

- Monitoring the terminal and matching its measurements against a database of stamped locations (with RSSIs from different base stations).

- Monitoring of (WLAN radio) access points: here it is evaluated whether a terminal is in range of one or several APs.
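The database-matching method can be sketched as a nearest-neighbour search over stored RSSI fingerprints. Everything specific here is a hypothetical illustration: the location names, the RSSI values, the squared-difference metric, and the -110 dBm floor used for base stations that were not heard.

```python
def locate_by_fingerprint(measured, database):
    """Return the stored location whose RSSI fingerprint is closest to `measured`.

    measured: dict base_station_id -> RSSI in dBm
    database: dict location_name -> dict base_station_id -> RSSI in dBm
    Base stations missing from either side are treated as a weak -110 dBm.
    """
    floor = -110.0

    def distance(stored):
        stations = set(measured) | set(stored)
        return sum((measured.get(s, floor) - stored.get(s, floor)) ** 2
                   for s in stations)

    return min(database, key=lambda name: distance(database[name]))


# Hypothetical survey data: RSSI (dBm) from three base stations at two places.
db = {
    "lobby":   {"BS1": -60, "BS2": -85, "BS3": -95},
    "parking": {"BS1": -90, "BS2": -70, "BS3": -75},
}
where = locate_by_fingerprint({"BS1": -62, "BS2": -88, "BS3": -93}, db)
print(where)
```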

Localization Categories

Different localization principles may be applied to gain position information with respect to an object that is to be tracked. Four different categories can be distinguished:

- Network-based: All necessary measurements are performed by the network (by one or several base stations). The measurements are usually sent to a common location centre that is part of the core network, which performs the final computation of the terminals’ positions.

- Terminal-based: In the terminal-based localization approach, the terminal itself determines its position. The obvious disadvantage is increased terminal complexity. The higher demands on computing power and equipment mean this method is only partly applicable to legacy terminals.

- Network-assisted: Similar to terminal-based positioning, network-assisted positioning means that the final calculation of the terminal’s position is done by the terminal. The difference is that assistance data is sent by the network, either on request or in a push manner.

- Terminal-assisted: This too is a hybrid of the methods above. The terminal measures reference signals from nearby base stations and provides feedback reports to the network. The final position computation takes place in a central location centre within the network.

Accuracy increases with the adoption of hybrid techniques.

Types of Positioning Techniques:

So we can classify the location positioning techniques currently deployed by wireless operators around the world as:

HSPA, EVDO, WiMax then LTE but what about the mobile backhaul??

November 2, 2009

With HSPA and EVDO maturing, WiMax getting deployed and LTE getting ready to create a buzz, the way mobile phones access networks is about to change. Bandwidth-hungry new services and applications, and the non-stop taps on smart handhelds, will eventually make these mature 3G networks obsolete. The 4G access networks are envisioned to handle this ever-increasing wireless broadband traffic, but what about the evolution of backhaul? Is it ready? Or is it going to be a major bottleneck, analogous to the traffic jam seen when only one lane of a four-lane expressway is open?

So, let’s have a closer look at how the mobile backhaul network is currently positioned.

The trend below depicts the exponential growth in asynchronous data demand for the next 5 years.

Mobile Traffic Projections for the next 5 years

Over the next few years, “user experience” will continue to rely on 3G (and in some regions on 2G) technology. But for the mobile operator, LTE/WiMax is already part of the game plan. Operators have to learn the technology and its impact on their networks, applications and service offerings. Service providers are seeking revenue and profit growth through new differentiated packet-based services. Many of these services, such as mobile Internet and mobile TV, require high bandwidth, and the current backhaul infrastructure is not optimized for handling such traffic. Hence, providers have to add backhaul capacity while keeping operational costs under control, a situation that is forcing carriers to migrate their access and core networks to the new 3G and 4G infrastructure.

There are three main transport technologies in the backhaul arena – fiber, copper and wireless point-to-point microwave.

Backhaul costs are a significant burden for service providers, accounting for three quarters of mobile transport costs and 25-30% of total operating expenses. The 2G infrastructure carried voice traffic over switched TDM (T1/E1 or SDH/SONET) or ATM. With 3G/4G services, bandwidth requirements have already shot up exponentially, and transporting voice and data efficiently has become the need of the hour.

Basic requirements for a 4G Backhaul network:

1. Capacity: A single tail site should be scalable to capacities of 100 Mbps and above to avoid bottlenecks.

2. Latency: A solution that supports end-to-end latency of 10 ms or less.

3. All IP: Support for IP traffic from head to tail.
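These three requirements amount to a simple checklist, sketched below. The `BackhaulLink` type and the two example links with their numbers are hypothetical, introduced only to show the check; the thresholds are the ones listed above.

```python
from dataclasses import dataclass


@dataclass
class BackhaulLink:
    name: str
    capacity_mbps: float
    latency_ms: float
    all_ip: bool


def unmet_4g_requirements(link):
    """List which of the three requirements a link fails (empty list = compliant)."""
    problems = []
    if link.capacity_mbps < 100:
        problems.append("capacity below 100 Mbps")
    if link.latency_ms > 10:
        problems.append("latency above 10 ms")
    if not link.all_ip:
        problems.append("not all-IP end to end")
    return problems


# Illustrative links with made-up figures.
legacy = BackhaulLink("bonded T1 bundle", capacity_mbps=6.0, latency_ms=8.0, all_ip=False)
packet = BackhaulLink("Ethernet microwave", capacity_mbps=200.0, latency_ms=2.0, all_ip=True)
for link in (legacy, packet):
    print(link.name, unmet_4g_requirements(link) or "meets all three requirements")
```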

The current migration strategy is to transport Ethernet packets over point-to-point microwave. Accounting for over 50% of all mobile backhaul deployments worldwide (and nearly 70% outside the U.S.A.), point-to-point microwave systems offer simple and cost-efficient backhauling for voice and high-speed data services. That’s because point-to-point microwave supports higher data rates than traditional copper T1/E1 lines, delivers between 25% and 60% more bits than similar TDM-based systems, and easily overcomes the high cost and limited availability associated with fiber. Operators can connect the TDM ports today and gradually shift traffic to the Ethernet ports in the future. This shift can be done remotely, so no additional CAPEX or OPEX is needed. The industry has already established that the end game of next-generation mobile backhaul networks is all-IP/Ethernet. Ethernet is not only more scalable, it also offers huge cost savings across the entire network value chain.

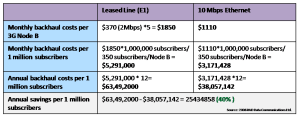

Ethernet cost savings per 1 Million subscribers

In addition to migrating to a high-capacity, lower-latency Ethernet/all-IP network, the systems should also support QoS-aware Adaptive Coding and Modulation and Statistical Multiplexing. The former helps optimize the network for spectrum efficiency, increasing radio capacity and thus reducing cost per bit; the latter optimizes traffic management over the network, reducing congestion and improving efficiency. An IP over Ethernet infrastructure benefits from the bandwidth growth curve of Ethernet, which has moved from 10 Megabits per second (Mbps) to 10 Gigabits per second (Gbps) today, with 100 Gbps in the future. This, coupled with the decreasing cost of Ethernet ports, provides growth opportunities with increasing economies of scale.

Ethernet microwave Vs. TDM microwave equipment cost comparison

Thus, of the three backhaul technology options operators can choose from, wireless point-to-point microwave can deliver the best cost-performance features, bringing faster ROI and driving forward the proliferation of advanced mobile services in the LTE/WiMax era. But in the longer run, a hybrid solution of microwave, optical or IP/MPLS core might be seen as a balanced solution that reduces OPEX with improved scalability, higher bandwidth, lower latency and better efficiency. So operators, pull up your socks and get ready for the great migration.

Also, a point to note is Cisco’s recent acquisition of Starent Networks, which now makes it one of the most dominant players in the mobile backhaul solutions market.

From the recent news releases:

Verizon has committed to deploying fiber to 90% of the cell sites in its territory by 2013, closely following VZW’s LTE rollout schedule.

Qwest plans to run fiber to 7,500-17,000 cell sites in its territory.

References:

“ATM to ALL IP” Cost effective Network Convergence – Tellabs ‘2009.

“LTE Backhaul Solutions”- Ceragon June 2009

“Cable Backhaul: A Towering Opportunity” - Webinar, Harris Stratex Networks, Nov. 2009

Posted by Neil Shah
